This week’s Founder Finds includes:

⚙️ An n8n MCP server

📖 Storytelling in SaaS

🦾 An agentic AI crash course

🧠 How to get your brain to focus

🌴 6 principles of lifestyle businesses

➡️ And more…

🪶 Remember This

Do not cling to a mistake just because you spent a lot of time making it.

🤓 Fav Finds

Tools, tweets and more from Trends Pro Members

🦾 Agentic AI Crash Course shared by Alejandro Arango

A guide to learning agentic AI for real-world applications

⚙️ n8n MCP shared by Elia Zane

An MCP server to build n8n workflows with AI

🧠 How to Get Your Brain to Focus shared by Jd Fiscus

A talk on harnessing focus for deeper work and more creativity

🏆 Trends Pro Member Wins

🧿 Dru Riley added weekly competitor summaries to HeadsUp

⚡ Ren Saguil is hosting a series of sales workshops on Maven

💬 Elie Steinbock was featured in an interview on 20i

📺 Alexandre Kantjas reached 30,000 subscribers on YouTube

🎨 Paul Martin made a video on the redesign of Bondex

📘 Read This

Why Do Most Founders End Up Working More, Not Less?

The secret to lifestyle businesses is building systems that work without you:

- Build simple products with no maintenance required

- Price strategically to avoid support nightmares (too low) and consultant dependency (too high)

- Choose uncrowded markets where you can dominate without constant competition crushing margins

🛠️ Tools of the Week

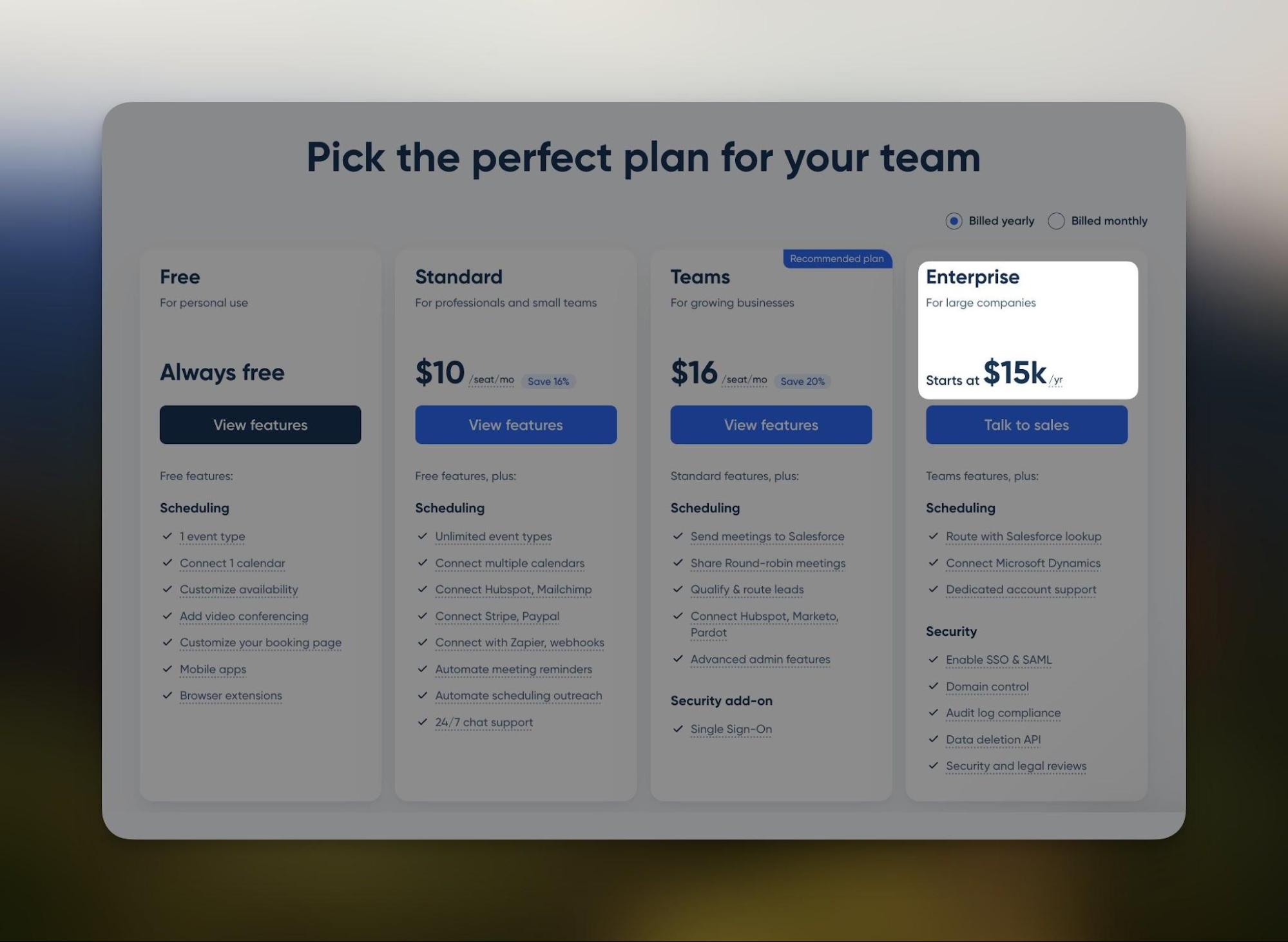

💲 Reply.io — Automate sales outreach

🎞️ Zubtitle — Edit social media videos fast

📊 Julius AI — Analyze your data with computational AI

💬 Join the Conversation

Here’s what Trends Pro Members are talking about…

- (📈 Pro) Best blogging platforms

- (📈 Pro) Building LLM chatbot apps

- (📈 Pro) Best prompt engineering courses

🎧 Listen To

Why Are You Selling Features When Customers Buy Outcomes?

Most SaaS founders pitch “40% faster processing” and wonder why prospects don’t get excited.

Instead, use stories to close the gap between features and outcomes people actually want:

- Build a story bank with customer wins, personal struggles and company milestones. When people see themselves in your stories, they stop evaluating and start buying

- Tell three types of business stories: mission (why you started), vision (what success looks like) and milestones (what you’ve overcome)

- Show your audience what’s at stake, what they want to learn, how you want them to feel and what they should do next

🍢 Trends Tribe

1-on-1 live meetups for Trends Pro Members.

- Make real connections

- Solve problems faster

- Help each other grow

Next meetup: October 20

The Most Popular Link From Last Week:

🎨 Build UI Designs and Wireframes with AI

Get Weekly Reports

Join 54,000+ founders and investors

📈 Unlock Pro Reports, 1:1 Intros and Masterminds

Become a Trends Pro Member and join 1,200+ founders enjoying…

🧠 Founder Mastermind Groups • Share goals and progress, and solve problems together. Each group is made up of 6 members who meet for 1 hour each Monday.

📈 100+ Trends Pro Reports • To make sense of new markets, ideas and business models, check out our research reports.

💬 1:1 Founder Intros • Make new friends, share lessons and find ways to help each other. Keep life interesting by meeting new founders each week.

🧍 Daily Standups • Stay productive and accountable with daily, async standups. Unlock access to 1:1 chats, masterminds and more by building standup streaks.

💲 $100k+ Startup Discounts • Get access to more than $100k in discounts on AWS, Twilio, Webflow, ClickUp and more.

Brought to you by the team behind HeadsUp